We recently hosted an Open Studio panel discussion about effective collaboration, and received a few audience questions on the topic that were both interesting and chunky. While the team touched on them in the conversation, we had a lot more to say, so we've turned our answers into a series of blog posts. Here's the first one.

Q: “I'd love to know at what point during a design journey you would identify the need to collaborate vs. work independently to achieve the best outcome. Working in a broader CX team, different people have their own thoughts on when to collaborate to build momentum/support vs. those who don't encourage collaboration until the outcome is perfect because they fear that we won't prove our worth until the end. As a natural collaborator with experience proving collaboration works, I find this approach frustrating so it forces me to take my own approach (ask for forgiveness later). ;) Would love to know your perspective.”

Paraphrased: When do you start collaborating?

TL;DR

Great question! We have a lot of ideas to share about this, including:

- While even the best ideas can be easily crushed, there is usually some strong, testable element somewhere. Stand back from your work, look for the testable core, and focus your collaborators’ attention on that. We’ll share some suggestions on how to do this.

- Do you need to convince people to collaborate earlier? Find out more about your collaborators and describe your approach in ways they’ll understand.

- Is vague or unhelpful feedback making it harder to develop a collaborative culture? Framing the feedback as questions is a great way to help with this.

The long answer:

This is a complicated question!

New ideas can be fragile, easily killed. It’s natural to want to protect them. On the other hand, many ideas are bad and probably deserve to die. So it’s a real dilemma!

Early-stage collaboration is interesting in that it’s almost indistinguishable from user testing. Like, at a certain point of germination you’re not “collaborating” so much as sounding out whether or not this other person even gets your idea. Do they like it? Do they understand it? Are they keen to work on it?

Seeing early collaboration as a form of testing is a good way to make it more palatable and clarify how that collaboration should work. The art is not in deciding when to test (the answer is always now!), it’s in deciding what to test and how to test it.

So let’s talk about collaboration as testing.

Fear of a false negative

The biggest fear we have in testing anything is the fear of the false negative: that someone will veto the idea not because it’s bad but because they just didn’t “get it”. And they didn’t get it because of small elements that would have evolved over time anyway (a feature, the messaging, visual design).

And this fear is justified in that people really do dismiss great ideas for small reasons, so it’s important to sweat the details. But that’s the slippery slope that leads you into building features and designs that don’t work because you set off down a path cleared by your earliest assumptions.

The art of the test

While even the best ideas can be fragile, there is usually some strong, testable element somewhere: the heart of the idea, the core piece of logic about how it is supposed to make a difference in the world. Your job early on is to identify that core and figure out an appropriate test.

The keyword is appropriate. The test doesn’t have to be comprehensive or rigorous; it just needs to be appropriate for your needs. For many formative ideas, the tests can be very loose and low-fi. You can use sketches, prototypes or processes; all that matters is that it’s something tangible that expresses the testable core. What you are looking for are strong signals on the dimension that you care about.

For example, we’ve been working on a youth-oriented mental health product that needs an animated guide-type character. A lot hinges on this choice of character: it affects branding, voice, structure etc—but because we’re amazing brain geniuses we had developed an unbeatable argument for which character design was the best choice.

Luckily, we’re co-designing this with a group of kids! We showed them some very sketchy rough initial ideas and they all hated our first preference, and instead, most of them picked an option that not only did we not like, but we were also pretty sure we couldn’t legally use, and had only included in order to make a point.

What do we make of that?

One way to look at it is, “We should have invested more work into the concepts: if only we had polished our favourite, or if we’d changed this or that detail, then the kids would have picked it!”

Another way to look at it is, “The users have spoken! The guide will be the chosen one, even though it doesn’t really make sense and might have legal issues!”

But the right way to look at it is, “What they most want is dimension X but they also clearly value dimension Y, and while the preferred option is not actually going to work for all sorts of reasons that they aren’t considering, we have this third character option over here that we originally dismissed but will meet all of the kids’ needs while also making sense in the app and avoiding any legal issues.”

The point is that while the feedback was definitely not what we wanted to hear, it gave us a much more accurate view of reality, and we could adapt and improve our ideas accordingly.

What if you don’t know what your testable core is?

Then run tests to find out!

I was talking to Sarah Mercer, one of our Client Principals and the head of our Melbourne studio, and she brought up the example of a strategy deck she’d been working on with a team. Some people were starting to feel lost. While some team members were super confident, others were losing faith in what they were delivering but weren’t sure how to improve it.

Looking for any kind of impression, they presented a WIP to a few directors, and the response wasn’t great: we liked the analysis but couldn’t understand the recommendations.

That’s painful. Nobody wants to work hard, feel like they’ve reached the end, and then be told they need to go back to the drawing board. But it was also data that the team did not have, and they were never going to get it by working within their own group until delivery day. They had to engage outsiders to get the feedback.

What they were really testing was the clarity of thinking. That was the testable core: does an audience follow this logic? The answer was no.

Happily, in this instance the core thinking was good; it was just weakly communicated. Sarah and the team were able to step back, look at their own work with fresh eyes, and find new structure, order and framing so that their content made sense.

This is about formative collaboration

To reiterate: we need to test ideas early and often so that we can uncover knowledge about the world. We are afraid of false negatives, so we defensively overbake the idea, whereas we should be putting our effort into identifying the part of the idea that needs testing and devising the right kind of test to get meaningful feedback.

Testing is not the same thing as collaboration, but the two are analogous: testing is all about getting input and investment from others on an idea, which is also the essence of collaboration.

How to convince other people to collaborate/test earlier

Two complementary options!

First, create positive experiences.

People will do what feels good, and so if you can engineer collaborative moments in early stages that give good feedback and insights and genuinely create a good vibe, then your coworkers are more likely to want to do the same thing.

Second, describe collaboration in a way that aligns with your prospective collaborators’ values.

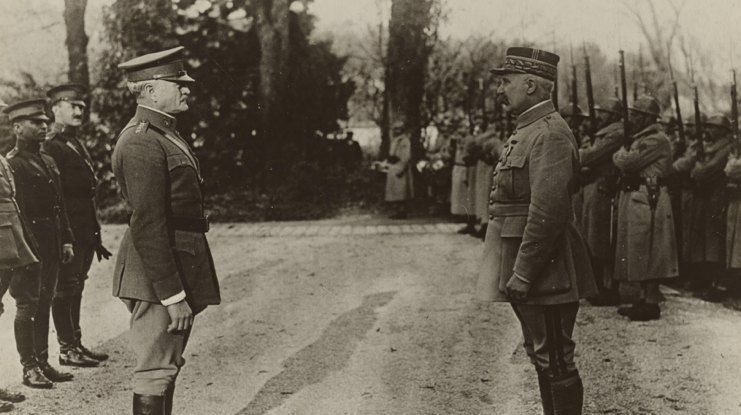

For example, sometimes we work with organisational leaders who value order and responsibility. It’s a natural reaction to being accountable for an organisation that is larger than they can know, but those values can squash agility and responsiveness. So I’ll sometimes refer to the military: it’s an archetype of order and responsibility, but if you look inside you’ll find all sorts of alignment with agile/lean/HCD-type practices.

These references are ways of suggesting that while early-stage collaboration and testing might feel messy and stressful, they are in fact responsible, professional, brave things to do, the kind of things people in the military would do—and for some people that can help build the confidence to make decisions that might feel unfamiliar or risky.

Of course, not everyone responds to military references. For someone who is more tech-aspirational, a different approach might be to reference Y Combinator, the startup accelerator behind Reddit, Stripe, Dropbox and Airbnb. For example, YC argue that the most important question for any startup to ask in the early stages is, “How many customers have you spoken to this week?”

It’s a simple question, but it’s their number one question to ask—and it’s fundamentally collaborative. (If the answer is zero you have a problem, and if the answer is anything else, you will have good information to use. The higher the number, the better you are probably doing.)

It’s another good way to suggest, “If you want to be like X, then here’s how they approach Y…”, which again builds that willingness to try something new.

Sarah also had a good note on this question:

For people who aren't in a position to change culture or feel daunted, the other option is to clearly frame how you want others to frame feedback when you share your fragile idea. I often forget this in the heat of presentation. Asking people to frame any feedback as a question is a good technique for these situations where the power dynamic is really out of whack. (For example, “Don't say 'I don't think that element is working for this audience', say 'Can you describe how you see that element working with this audience?'” can help flip the dynamic.)

For more articles and information on collaboration, check out the LiquidCollab.